8 ways to improve ASP.NET Web API performance

ASP.NET Web API is a great piece of technology. Writing a Web API is so easy that many developers don’t take the time to structure their applications for great performance.

In this article, I am going to cover 8 techniques for improving ASP.NET Web API performance.

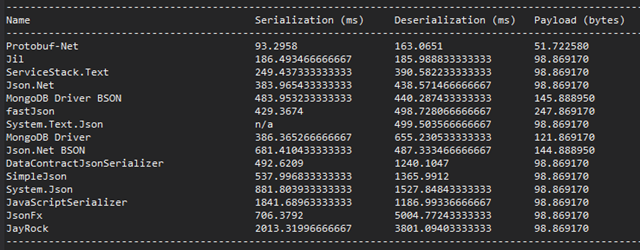

1) Use the fastest JSON serializer

JSON serialization can significantly affect the overall performance of ASP.NET Web API. A year and a half ago, I switched one of my projects from the JSON.NET serializer to ServiceStack.Text.

I measured around a 20% performance improvement in my Web API responses. I highly recommend that you try this serializer. Here is a recent performance comparison of popular serializers.

UPDATE: It seems that Stack Overflow uses Jil, a JSON serializer that they claim is even faster. You can view some benchmarks on the Jil serializer's GitHub page.

2) Manually serialize JSON from a DataReader

I have used this method on a production project and gained performance benefits.

The usual approach has three steps: read values from a DataReader, populate objects with them, and then read the values back out of those objects to produce JSON with a serializer. Instead, you can build the JSON string directly from the DataReader and avoid creating unnecessary objects.

You produce the JSON with a StringBuilder and, at the end, return it as StringContent in your Web API response:

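```csharp
// jsonResult is the JSON string built manually from the DataReader with a StringBuilder
var response = Request.CreateResponse(HttpStatusCode.OK);
response.Content = new StringContent(jsonResult, Encoding.UTF8, "application/json");
return response;
```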

You can read more about this method in my blog post “Manual JSON serialization from DataReader in ASP.NET Web API”.
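The sketch below illustrates the idea only; it is not the code from that blog post. It writes the current resultset of any `IDataReader` straight into a `StringBuilder` as a JSON array of objects, using column names as property names. The names `DataReaderJson` and `ToJsonArray` are hypothetical. Type handling is deliberately minimal and covers numbers, booleans, `DateTime`, strings and `DBNull`.

```csharp
using System;
using System.Data;
using System.Globalization;
using System.Text;

// Hypothetical helper: serializes the current resultset of an IDataReader to a JSON array
// without creating any intermediate objects per row.
public static class DataReaderJson
{
    public static string ToJsonArray(IDataReader reader)
    {
        var sb = new StringBuilder();
        sb.Append('[');
        bool firstRow = true;
        while (reader.Read())
        {
            if (!firstRow) sb.Append(',');
            firstRow = false;
            sb.Append('{');
            for (int i = 0; i < reader.FieldCount; i++)
            {
                if (i > 0) sb.Append(',');
                AppendString(sb, reader.GetName(i)); // column name becomes the property name
                sb.Append(':');
                AppendValue(sb, reader.GetValue(i));
            }
            sb.Append('}');
        }
        sb.Append(']');
        return sb.ToString();
    }

    private static void AppendValue(StringBuilder sb, object value)
    {
        switch (value)
        {
            case null:
            case DBNull _:
                sb.Append("null");
                break;
            case bool b:
                sb.Append(b ? "true" : "false");
                break;
            case DateTime dt:
                // ISO 8601 round-trip format, written as a JSON string
                AppendString(sb, dt.ToString("o", CultureInfo.InvariantCulture));
                break;
            case IConvertible c when IsNumeric(c.GetTypeCode()):
                // Invariant culture so decimals use '.' on any server locale.
                // Note: double NaN/Infinity are not valid JSON and are not handled here.
                sb.Append(Convert.ToString(value, CultureInfo.InvariantCulture));
                break;
            default:
                AppendString(sb, Convert.ToString(value, CultureInfo.InvariantCulture));
                break;
        }
    }

    // SByte..Decimal in the TypeCode enum are exactly the numeric types
    private static bool IsNumeric(TypeCode code) =>
        code >= TypeCode.SByte && code <= TypeCode.Decimal;

    // Writes a quoted JSON string, escaping quotes, backslashes and control characters
    private static void AppendString(StringBuilder sb, string s)
    {
        sb.Append('"');
        foreach (char ch in s)
        {
            switch (ch)
            {
                case '"': sb.Append("\\\""); break;
                case '\\': sb.Append("\\\\"); break;
                case '\n': sb.Append("\\n"); break;
                case '\r': sb.Append("\\r"); break;
                case '\t': sb.Append("\\t"); break;
                default:
                    if (ch < 0x20) sb.Append("\\u").Append(((int)ch).ToString("x4"));
                    else sb.Append(ch);
                    break;
            }
        }
        sb.Append('"');
    }
}
```

In a Web API action, you would call it on the reader returned by `command.ExecuteReader()` and pass the result as `jsonResult` to the `StringContent` shown above. To try it without a database, `DataTable.CreateDataReader()` gives you an in-memory reader. A table with the rows `(1, "Acme \"Ltd\"")` and `(2, DBNull.Value)` produces `[{"Id":1,"Name":"Acme \"Ltd\""},{"Id":2,"Name":null}]`.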

3) Use other formats if possible (Protocol Buffers, MessagePack)

If your project can use formats such as Protocol Buffers or MessagePack instead of JSON, do it.

You can get large performance benefits. The Protocol Buffers serializer is faster, and the binary format is also smaller than JSON, which results in smaller and faster responses.
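To see why a binary format such as Protocol Buffers produces smaller payloads, here is a hand-written sketch of its wire encoding for a single integer field. The field starts with a varint key (the field number shifted left by 3, combined with wire type 0), followed by the value as a base-128 varint. The `ProtobufSketch` class is hypothetical and for illustration only. In a real project you would use an established Protocol Buffers library such as protobuf-net rather than encoding by hand.

```csharp
using System.Collections.Generic;

// Hypothetical illustration of the Protocol Buffers wire format for one varint field
public static class ProtobufSketch
{
    private const ulong WireTypeVarint = 0;

    // Base-128 varint: 7 bits per byte, high bit set on every byte except the last
    public static void WriteVarint(List<byte> output, ulong value)
    {
        while (value >= 0x80)
        {
            output.Add((byte)(value | 0x80)); // low 7 bits plus the continuation bit
            value >>= 7;
        }
        output.Add((byte)value);
    }

    // Key = (fieldNumber << 3) | wireType, then the value itself as a varint
    public static byte[] EncodeIntField(int fieldNumber, ulong value)
    {
        var output = new List<byte>();
        WriteVarint(output, ((ulong)fieldNumber << 3) | WireTypeVarint);
        WriteVarint(output, value);
        return output.ToArray();
    }
}
```

Encoding field 1 with the value 150 yields the three bytes `08 96 01`, whereas the JSON `{"id":150}` takes 10 bytes. Protocol Buffers can leave out the field name because client and server share the schema.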

4) Implement compression

Use GZIP or Deflate compression on your ASP.NET Web API.

Compression is an easy and effective way to reduce the size of your responses and speed up their transfer.

This is a must-have feature. You can read more about this in my blog post “ASP.NET Web API GZip compression ActionFilter with 8 lines of code”.
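The core of a compression filter is running the response bytes through `GZipStream`. The sketch below shows that step in isolation. `GzipHelper` is a hypothetical name, and the ActionFilter plumbing from the blog post is not reproduced here.

```csharp
using System.IO;
using System.IO.Compression;

// Hypothetical helper showing the GZip step a compression filter performs
public static class GzipHelper
{
    public static byte[] Compress(byte[] data)
    {
        using (var output = new MemoryStream())
        {
            // Disposing the GZipStream flushes the final block and writes the gzip footer,
            // so it must happen before the buffer is read.
            using (var gzip = new GZipStream(output, CompressionMode.Compress))
            {
                gzip.Write(data, 0, data.Length);
            }
            return output.ToArray(); // ToArray still works on a closed MemoryStream
        }
    }

    public static byte[] Decompress(byte[] data)
    {
        using (var input = new GZipStream(new MemoryStream(data), CompressionMode.Decompress))
        using (var output = new MemoryStream())
        {
            input.CopyTo(output);
            return output.ToArray();
        }
    }
}
```

In a Web API filter, you would do the following:

- Compress only when the request's `Accept-Encoding` header contains `gzip`.
- Replace the response content with a `ByteArrayContent` holding the compressed bytes, keeping the original `Content-Type`.
- Add `gzip` to `Content.Headers.ContentEncoding`.

Repetitive payloads such as JSON arrays usually compress very well.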

5) Use caching

If it makes sense, use output caching on your Web API methods. A typical case is when many users request the same response and that response changes only about once a day.

If you want to implement caching manually, such as caching users' tokens in memory, please refer to my blog post “Simple way to implement caching in ASP.NET Web API”.
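Here is a minimal sketch of manual in-memory caching with absolute expiration, assuming a single server process. `SimpleMemoryCache` is a hypothetical class and not the code from the blog post. The clock is injectable only so that expiration can be tested.

```csharp
using System;
using System.Collections.Concurrent;

// Hypothetical in-memory cache with absolute expiration per entry
public sealed class SimpleMemoryCache<TValue>
{
    private readonly ConcurrentDictionary<string, (TValue Value, DateTime ExpiresUtc)> _entries =
        new ConcurrentDictionary<string, (TValue Value, DateTime ExpiresUtc)>();
    private readonly Func<DateTime> _utcNow;

    public SimpleMemoryCache(Func<DateTime> utcNow = null)
    {
        _utcNow = utcNow ?? (() => DateTime.UtcNow);
    }

    // Returns the cached value if it has not expired; otherwise calls the factory
    // (e.g. a database query) and caches its result for the given time-to-live.
    public TValue GetOrAdd(string key, Func<TValue> factory, TimeSpan ttl)
    {
        DateTime now = _utcNow();
        if (_entries.TryGetValue(key, out var entry) && entry.ExpiresUtc > now)
            return entry.Value;

        // Under concurrent requests the factory may run more than once for the same key;
        // the last result written wins.
        TValue value = factory();
        _entries[key] = (value, now + ttl);
        return value;
    }
}
```

Running the factory more than once is usually acceptable for data that changes about once a day. If you need eviction and memory limits, consider `System.Runtime.Caching.MemoryCache` (or `Microsoft.Extensions.Caching.Memory` on .NET Core), which provide them.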

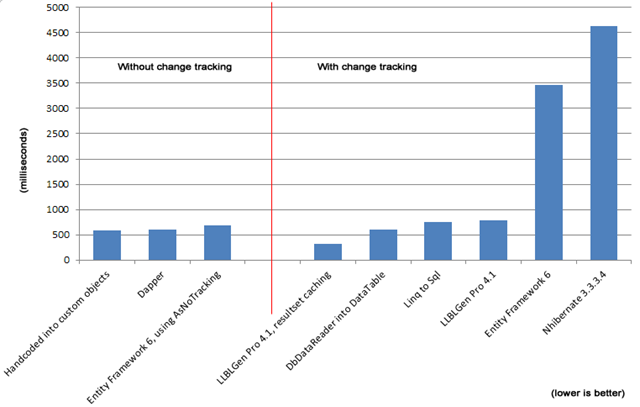

6) Use classic ADO.NET if possible

Hand-coded ADO.NET is still the fastest way to get data from a database. If the performance of your Web API is really important to you, don’t use ORMs.

Here is one of the latest performance comparisons of popular ORMs.

In that comparison, Dapper and the hand-written fetch code are very fast, as expected, and all ORMs are slower than them.

LLBLGen with resultset caching is very fast, but it fetches the resultset once and then re-materializes the objects from memory.
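Hand-coded ADO.NET materialization usually looks like the sketch below: resolve column ordinals once, then use the typed getters for each row. The `Supplier` class, its `Id`/`Name` columns and `SupplierReader` are hypothetical. In production, the `IDataReader` would come from something like `SqlCommand.ExecuteReader()`.

```csharp
using System.Collections.Generic;
using System.Data;

// Hypothetical entity used only for this example
public sealed class Supplier
{
    public int Id { get; set; }
    public string Name { get; set; }
}

public static class SupplierReader
{
    public static List<Supplier> ReadSuppliers(IDataReader reader)
    {
        var result = new List<Supplier>();

        // Look up column positions once instead of by name on every row
        int idOrdinal = reader.GetOrdinal("Id");
        int nameOrdinal = reader.GetOrdinal("Name");

        while (reader.Read())
        {
            result.Add(new Supplier
            {
                Id = reader.GetInt32(idOrdinal),
                // Nullable column: check for DBNull before calling the typed getter
                Name = reader.IsDBNull(nameOrdinal) ? null : reader.GetString(nameOrdinal)
            });
        }
        return result;
    }
}
```

Resolving ordinals outside the loop avoids a name lookup per row. The typed getters can also avoid boxing each value the way `GetValue` does.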

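7) Implement async on Web API methods

Using asynchronous Web API methods can increase the number of concurrent HTTP requests your Web API can handle. This improves throughput rather than the speed of an individual request.

The implementation is simple: mark the operation with the async keyword and change its return type to Task (or Task<T> if it returns a value).

```csharp
[HttpGet]
public async Task OperationAsync()
{
    // Task.Delay stands in for real asynchronous I/O, such as an async database query;
    // while it is awaited, the request thread is returned to the thread pool.
    await Task.Delay(2000);
}
```

8) Return multiple resultsets and combined results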

Reduce the number of round-trips, not only to the database but to the Web API as well. Use multiple resultsets whenever possible.

This means reading several resultsets from a single DataReader, as in the example below:

```csharp
// execute the command; the reader starts on the first resultset
using (var reader = command.ExecuteReader())
{
    // read the data from the first resultset
    while (reader.Read())
    {
        suppliers.Add(PopulateSupplierFromIDataReader(reader));
    }

    // move to the next resultset
    reader.NextResult();

    // read the data from the second resultset
    while (reader.Read())
    {
        products.Add(PopulateProductFromIDataReader(reader));
    }
}
```

Return as many objects as you can in one Web API response. Try combining them into one aggregate object like this:

```csharp
public class AggregateResult
{
    public long MaxId { get; set; }
    public List<Folder> Folders { get; set; }
    public List<User> Users { get; set; }
}
```

This way you will reduce the number of HTTP requests to your Web API.
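Putting both ideas together, the sketch below fills one aggregate object from a single reader that returns two resultsets, folders first and users second. It is a self-contained variant of the `AggregateResult` class above, with the lists initialized. The `Folder` and `User` shapes, the column order and `AggregateLoader` are assumptions for illustration. `MaxId` is left untouched because the post does not say how it is computed.

```csharp
using System.Collections.Generic;
using System.Data;

// Hypothetical shapes; the post does not define Folder or User
public class Folder { public int Id { get; set; } public string Name { get; set; } }
public class User { public int Id { get; set; } public string Name { get; set; } }

public class AggregateResult
{
    public long MaxId { get; set; }
    public List<Folder> Folders { get; set; } = new List<Folder>();
    public List<User> Users { get; set; } = new List<User>();
}

public static class AggregateLoader
{
    // Assumes column 0 = Id (int) and column 1 = Name (string) in both resultsets
    public static AggregateResult Load(IDataReader reader)
    {
        var result = new AggregateResult();

        // first resultset: folders
        while (reader.Read())
            result.Folders.Add(new Folder { Id = reader.GetInt32(0), Name = reader.GetString(1) });

        // second resultset: users (NextResult returns false if there is none)
        if (reader.NextResult())
        {
            while (reader.Read())
                result.Users.Add(new User { Id = reader.GetInt32(0), Name = reader.GetString(1) });
        }

        // MaxId is intentionally not set here; its meaning is not specified in the post
        return result;
    }
}
```

The whole object then goes back in one response, for example `return Ok(result);` from an `ApiController` action. The client makes one HTTP request instead of two, and the server makes one database round-trip instead of two.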

Thank you for reading this article.

Leave a comment below and let me know what other methods you have found to improve Web API performance.

khorvat2

July 16, 2014 at 4:30 pm

Nice, really nice. We use some of these libraries, so we can try some of your suggestions. Thanks

Radenko Zec

July 16, 2014 at 4:39 pm

Thanks Kristijan. If you get stuck somewhere, feel free to contact me.

Acesyde

July 16, 2014 at 9:12 pm

Very nice, thanks for that!

Radenko Zec

July 16, 2014 at 9:21 pm

Thanks for the comment.

cubski

July 17, 2014 at 4:16 am

Great post Radenko! Looking forward to future posts.

Radenko Zec

July 17, 2014 at 5:37 am

Thanks.

B. Clay Shannon

July 21, 2014 at 3:52 pm

In tip #2, where/how is jsonResult declared/initialized?

Radenko Zec

July 21, 2014 at 7:29 pm

Hi Clay. That is a good question. I extracted part of the WestWind library described in this blog post: http://weblog.west-wind.com/posts/2009/Apr/24/JSON-Serialization-of-a-DataReader

This library builds the JSON string with a StringBuilder, handling every SQL Server data type.

You could use the same part of that library or write your own implementation.

Radenko Zec

October 17, 2014 at 8:24 am

I have just written another blog post that addresses your question: http://blog.developers.ba/manual-json-serialization-datareader-asp-net-web-api/

Zias Zias

July 22, 2014 at 12:24 pm

nice!

Radenko Zec

July 22, 2014 at 12:54 pm

Thanks.

dotnetchris

July 22, 2014 at 5:42 pm

Note that 7) “Implement async on methods of Web API” will likely LOWER performance. It should increase THROUGHPUT, however.

Nothing can ever be faster than synchronous code. It’s certainly not efficient, however.

Radenko Zec

July 22, 2014 at 7:26 pm

Hi Chris. Thanks for the comment. You are totally right: throughput will be increased. This blog post has some benchmarks showing how much you can gain by implementing async methods: http://robinsedlaczek.wordpress.com/2014/05/20/improve-server-performance-with-asynchronous-webapi/

Vedran Mandić

July 23, 2014 at 2:22 pm

Nice! Very smart and simple performance tips, Radenko. You write an interesting blog; I will definitely be reading it. Cheers.

Radenko Zec

July 23, 2014 at 2:43 pm

Thanks Vedran. You can use this link http://eepurl.com/ZfI8v to subscribe to this blog and make sure you don’t miss upcoming blog posts.

Vedran Mandić

July 23, 2014 at 2:50 pm

Thanks!! Done.

Allan Chadwick

July 23, 2014 at 5:10 pm

I don’t think point 6 is fully accurate. ORMs, specifically Entity Framework, can consume stored procedures with the same performance as classic ADO.NET, so the point should be to use stored procedures and avoid dynamically created SQL. Entity Framework and other ORMs are still worth using for their code generation, consistency in the DAL layer, and general popularity, which decreases the cost of ownership. http://stackoverflow.com/questions/8103379/entity-framework-4-1-vs-enterprise-data-application-block-maximum-performance

Radenko Zec

July 23, 2014 at 5:46 pm

Hi Allan. Thanks for the comment. I am not sure that it is worth using EF if you will use it only to consume stored procedures.

If you don’t use other features such as change tracking or LINQ, I would always choose classic ADO.NET.

But that is my opinion. Someone else might choose EF or some other ORM.

Allan Chadwick

July 23, 2014 at 6:53 pm

I don’t think enough people realize that LINQ in EF is optional. EF is still a quick code generator for your database, which will get you wired up more quickly than hand-writing ADO. Although I can’t blame anybody for avoiding the EF ‘Wizard’ experience or the initial learning curve without any benefit. 🙂 As my buddy told me once.. “It’s the future!” lol.

tw37

July 24, 2014 at 5:27 am

Good tips Radenko. We happen to run an Open BoK (Body of Knowledge) called Practical Performance Analyst. Take a look at what we do at practicalperformanceanalyst.com and let me know if you are keen to come and help.

Evgeny

July 28, 2014 at 8:53 am

Good post!!!

great tips!!!

thank you.

Radenko Zec

September 2, 2014 at 5:45 am

Thanks Evgeny.

Wil

September 1, 2014 at 10:17 pm

Hi Radenko,

Great post!

I came across it at just the right time in my project. However, I have decided to keep EF in my project for my internal business logic (i.e. small-volume returns), as I like the code-first method, but to use the old data stream methods for the big database hits. Just wondering if anyone else has experience using a mix of EF and classic ADO.NET in the same project?

Cheers,

Wil

Radenko Zec

September 2, 2014 at 5:49 am

Hi Wil. Thanks for the comment. It seems very reasonable to use classic ADO.NET only when it is necessary.

I have also done it a similar way on some of my projects.

ali

September 17, 2014 at 3:06 am

You could also use Database.SqlQuery, which uses raw SQL and materializes objects for you.

Mariusz Zaleski

December 17, 2014 at 4:41 pm

hardcore but nice 🙂

Radenko Zec

December 17, 2014 at 5:40 pm

Thanks Mariusz 🙂

Jerry Hewett

February 26, 2015 at 7:49 pm

Really wished this all worked as advertised, but it doesn’t.

http://stackoverflow.com/questions/28402460/web-api-issue-with-sending-compressed-response

The Content-Encoding value of “gzip” is in there until the response is delivered to the client. Then something in the underlying brain-damaged ASP .Net Web API strips it out.

I’ve wasted almost a week on this. Not too damned happy about it, either.

Jerry (Not A Happy Camper) H.

Radenko Zec

February 26, 2015 at 8:20 pm

Hi Jerry.

I am sorry you wasted almost a week on this.

I haven’t worked with gzip for a few months, but:

Web browsers automatically decode gzip and deflate responses.

It is possible that your response has already been decompressed by the browser.

You should check the response headers to see whether Content-Encoding is gzip.

It is also possible that Fiddler, Firebug, or the browser dev tools automatically decompress the response (I cannot remember).

An easy way to check whether your Web API compresses correctly is to call it from a simple console or WinForms app using HttpClient.

Then you will see whether the response is gzipped.

Please make sure you haven’t enabled automatic decompression in HttpClient like this:

```csharp
var client = new HttpClient(
    new HttpClientHandler
    {
        AutomaticDecompression = DecompressionMethods.GZip | DecompressionMethods.Deflate
    });
```

This automatically decompresses gzip/deflate responses.

Jerry Hewett

February 26, 2015 at 9:07 pm

I’ve even tried changing the Content-Type to ones that are part of the IIS configuration (all of this below), and it still doesn’t work, so at this point I’m pretty much ready to abandon this API.

However, all of this works just fine if I’m *NOT* using the ASP .Net Web API. I’ve had an ASMX in production for the past four years that does essentially the same thing for dynamic content (JS, CSS, etc.) using pretty much the same code for GZIP (and setting the headers, etc.), and it’s been working flawlessly.

So at this point I’m pretty sure it isn’t the server configuration that’s the problem. The problem is something in the ASP .Net Web API.

Jerry H.

--------

Request Header:

```
Host: localhost
User-Agent: Mozilla/5.0 (Windows NT 6.3; WOW64; rv:33.0) Gecko/20100101 Firefox/33.0
Accept: */*
Accept-Language: en-US,en;q=0.5
Accept-Encoding: gzip, deflate
Referer: http://localhost/jsontest.html
Connection: keep-alive
```

Response Header:

```
HTTP/1.1 200 OK
Cache-Control: private
Transfer-Encoding: chunked
Content-Type: application/x-javascript
ETag: "3FC71"
Vary: Accept-Encoding
Server: Microsoft-IIS/8.5
Access-Control-Allow-Headers: Content-Type
Access-Control-Allow-Origin: *
X-AspNet-Version: 4.0.30319
X-Powered-By: ASP.NET
Date: Thu, 26 Feb 2015 20:54:31 GMT
```

applicationHost.config:

Radenko Zec

February 26, 2015 at 9:23 pm

Hi Jerry.

I have enabled deflate compression on ASP.NET Web API and run it in a production environment on IIS without any issues.

First, try calling the Web API from a simple console application to check whether the response is delivered gzipped.

Jerry Hewett

March 6, 2015 at 3:49 pm

Turns out it’s an issue with Windows (or, more likely, one of Microsoft’s new “security by obscurity” updates):

http://stackoverflow.com/questions/13516844/

I’ve tried a few tests, and the issue seems to occur when the response is passed through one of Microsoft’s upper-level socket layers. I can get back a compressed response with the correct headers if I use wget, but the information is stripped out if I try it from a (any!) web browser.

Eduardo Cucharro

April 23, 2015 at 4:49 pm

Awesome tips! Thank you for sharing!

Radenko Zec

April 23, 2015 at 5:15 pm

Thanks Eduardo.

yaron levi

April 27, 2015 at 10:47 am

Great! Thank you

Radenko Zec

October 28, 2015 at 6:55 am

Thanks for your comment.

joy

August 24, 2015 at 3:11 am

very useful

Radenko Zec

October 28, 2015 at 6:55 am

Thanks

abatishchev

September 7, 2015 at 6:48 pm

I would strongly discourage #6.

Herbey Zepeda

January 9, 2017 at 1:32 am

why?

abatishchev

January 9, 2017 at 8:35 am

ORMs are out there for a reason. One can achieve highly optimized and well-performing code even with EF et al. Another option would be OData, which is translated directly to SQL and bypasses extra layers of indirection.

The problem with ADO.NET is the amount of boilerplate code, which one is very unlikely to cover with tests, so it’s error-prone. If one really has the resources to write tests for it, there are better ways to spend those resources.

TravisO

February 6, 2018 at 3:09 pm

The goal of ORMs is abstraction, not performance. As any benchmark shows, EF is the worst-performing ORM on the market. For most projects it’s fine, but if you need extreme performance, then any ORM is a bad idea. Your EF advice is completely flawed, and you need to actually read up before making claims.

abatishchev

February 6, 2018 at 5:34 pm

You’re talking to an expert in EF fine-tuning and optimization. I led a team that created a highly loaded and, at the same time, scalable, reliable, and resilient system. But yeah, my advice is flawed and I need to read up before making claims.

TravisO

February 6, 2018 at 6:07 pm

I’ll let the numbers disprove you. A quick Google search finds this:

Summary: EF is 3x-7x slower than a simple ADO.Net query on EF 6.x

http://www.groovycoder.net/2015/11/09/orm-performance-of-entity-framework-and-dapper-in-comparison-to-ado-net-as-of-2015/

abatishchev

February 6, 2018 at 6:47 pm

Did I say anywhere that EF is the fastest ORM for .NET out there? Never.

I said that one can continue to use an ORM with its benefits and achieve good performance at the same time.

Yes, stripping away the ORM might/will gain performance. But for regular users, the cost of doing this right plus losing the said benefits would be too high. Don’t try this at home/proceed with caution.

Coder Absolute

October 28, 2015 at 2:25 am

Excellent article, very precise! Thanks for taking the time to write it! 🙂

Radenko Zec

October 28, 2015 at 6:54 am

Thanks.

Sander Wollaert

October 29, 2015 at 3:43 pm

I’ve created a blog post about adding Protocol Buffers to ASP.NET Web API: https://swollaertblog.wordpress.com/

Radenko Zec

October 30, 2015 at 6:59 am

Thanks for the great blog post.

Matt Watson

November 27, 2015 at 3:02 pm

These are all great tips! I wrote a whole article on JSON tips that you might be interested in: http://stackify.com/top-11-json-performance-usage-tips/

Also, as you make performance improvements, be sure to use an APM solution so you can track whether your changes are improving load times, reducing CPU, etc. APM tools can also help you find slow database queries and cache calls, view logging, find errors, etc.

Thanks, Matt with Stackify APM (http://www.stackify.com)

Alaa Albeteihi

February 13, 2016 at 5:59 pm

Great Tips!

Guru Pitka

May 6, 2016 at 6:29 am

Another life saver: if you’re using HttpClient, use a single instance. See this thread for more info: http://stackoverflow.com/questions/22560971/what-is-the-overhead-of-creating-a-new-httpclient-per-call-in-a-webapi-client/22561368#22561368

Emad Syed

March 15, 2017 at 12:07 pm

Hi, I switched from ASMX services to Web API for a running project and was fascinated by its advantages over the previous one. The client was Android, and the solution is deployed for a particular set of users (not on the Play Store). The server CPU choked within seconds. To be specific, 13k out of 30k users had received the new application. This new build also has HTTPS and SSL pinning implemented. The previous version called, say, 5 methods one by one to complete a transaction. The new approach takes all input and sends one request to the server. I need your help and guidance about the probable cause of this issue. Is my approach OK to follow, i.e. multiple methods vs. a single method?

--

Thanks

Radenko Zec

March 15, 2017 at 12:12 pm

Hi there. It is hard to say what the problem is without seeing the actual code. You can send me an email via the contact form on this website to discuss the problems you have in more detail.

Emad Syed

March 15, 2017 at 3:35 pm

Hi, thanks for replying. I implemented the Web API in an existing web service. By running a load test from JMeter and reading some posts, I am observing blocking on requests. The requests seem to execute one after another. I am also reading about a solution that disables session state, but I do not know how to do it here.