Little known ways to cheat duplicate content detection

The Web is a strange place. Googlebot is an even stranger piece of software.

I consider Google a typical software company.

In my picture of Google, person A from India worked there x years ago and wrote some small part of Googlebot. Today nobody, not even person A, knows what that piece of code actually does.

Googlebot has many parts written by many people like person A.

The best way to keep your software secret is to not know how your software actually does the job.

A lot of content on the web is duplicate content. For example, if you plan to run a site about quotes, you will always use someone else’s words.

Many of these quotations can probably be found on other sites. Will Googlebot detect your content as duplicate?

Yesterday a strange thought came to my mind. Is it possible for someone to take your original content, publish it on their own website and, in the eyes of Google, become the original author, so that your content becomes the duplicate?

Here is the text from the Google article “How Google Search Works”:

Google’s crawl process begins with a list of web page URLs, generated from previous crawl processes, and augmented with Sitemap data provided by webmasters. As Googlebot visits each of these websites it detects links on each page and adds them to its list of pages to crawl.

https://support.google.com/webmasters/answer/70897?hl=en

If you want to learn more about SEO from real experts I can recommend this great book:

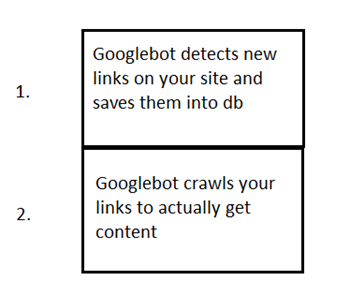

How Google crawls low authority websites (my opinion)

Let’s explain the picture above. If your site does not have a very high PageRank, Googlebot will probably come every day and only collect the list of fresh links on your site. After that it will probably fetch the content of some older links that were detected on previous days.

Even if Googlebot does not detect the fresh links on your website the same day, you can:

- Post them on Google+ (some people say that this will automatically save a fresh link in the Google DB)

- Manually submit links using “Fetch as Google”

- Update your sitemap (Google probably checks the sitemap every day because this is not too expensive an operation)
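The sitemap update in the last item could look roughly like the sketch below. It assumes a Python script regenerates the file; the `build_sitemap` helper, the example URL and the date are hypothetical, while the `urlset`, `url`, `loc` and `lastmod` elements come from the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET
from datetime import date

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Build sitemap XML from (url, last_modified_date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, last_modified in entries:
        node = ET.SubElement(urlset, "url")
        ET.SubElement(node, "loc").text = url
        # lastmod tells crawlers when the page last changed (YYYY-MM-DD).
        ET.SubElement(node, "lastmod").text = last_modified.isoformat()
    return ET.tostring(urlset, encoding="unicode")


# Hypothetical fresh link, listed with the day it was published.
sitemap_xml = build_sitemap([
    ("https://site.com/FunnyQuotes/2413", date(2014, 6, 26)),
])
print(sitemap_xml)
```

Writing `sitemap_xml` to the sitemap file on every publish keeps the list of fresh links current without waiting for a crawl of the pages themselves.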

How someone can cheat Googlebot and publish duplicate content as original

By following these steps, someone might be able to cheat Googlebot.

- Day 1: Publish dummy links without any meaningful anchor text and with dummy content, and try to get Googlebot to discover them using some of the methods described above (for example: site.com/FunnyQuotes/2413)

- Day 2: Find a very popular site that publishes original content every day. Copy the original content from that site and replace the dummy content behind the links created yesterday with this new original content (from the high-ranking website).

What I think will happen:

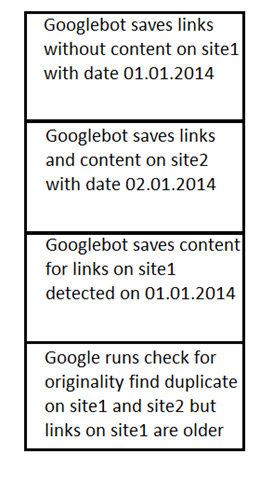

So Googlebot will first detect the links on your site. Because your site has a lower PageRank, it will not crawl their content right away.

After this, Googlebot will detect the links with the content on the popular website. It will crawl the content together with the links because this site has a high PageRank.

After a few days Google will also crawl the content behind your links and save it into the Google DB. Somewhere between one and six months later, Google will run an originality check within the same niche and detect that your site (site1) and site2 have the same content.
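How Google actually compares pages is not public. As a rough illustration of what such an originality check might involve, here is a minimal sketch of one well-known near-duplicate technique, word shingling with Jaccard similarity. The function names, the shingle size and the sample texts are assumptions made for this example, not anything taken from Google.

```python
def shingles(text, size=3):
    """Return the set of overlapping word n-grams ("shingles") in text."""
    words = text.lower().split()
    if len(words) < size:
        # Very short texts become a single shingle (or none if empty).
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard(text_a, text_b, size=3):
    """Jaccard similarity of the two shingle sets, between 0.0 and 1.0."""
    a, b = shingles(text_a, size), shingles(text_b, size)
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


# Hypothetical page texts: site1 copied site2 word for word.
site1 = "The best way to predict the future is to invent it"
site2 = "The best way to predict the future is to invent it"
other = "A completely unrelated page about cooking pasta at home"

print(jaccard(site1, site2))
print(jaccard(site1, other))
```

A score close to 1.0 would flag two pages as duplicates; the check says nothing about which of them came first.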

If your site has the older links in the Google DB, Google will probably conclude that you had the original content, even though this is not true.

This way you will not only increase your own website's PageRank but also decrease your competitor's.

Tell me what you think about this theory. Is there a way to protect your site against this?
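The theory boils down to a "first seen wins" rule. The sketch below models only that assumption: each URL is stored with the date its link was first discovered, and the earliest one is treated as the original. The `pick_original` helper, the domain names and the dates are hypothetical, and nothing here is confirmed Google behaviour.

```python
from datetime import date


def pick_original(records):
    """Return the URL with the earliest first-seen date.

    records is a list of (url, first_seen_date) pairs that were
    already found to share the same content.
    """
    return min(records, key=lambda record: record[1])[0]


records = [
    # Dummy link discovered on day 1, content swapped in on day 2.
    ("copycat.example/FunnyQuotes/2413", date(2014, 6, 25)),
    # The real original, published and crawled on day 2.
    ("popular.example/quotes/today", date(2014, 6, 26)),
]

print(pick_original(records))
```

Under this rule the copy wins because its link is older, even though its content arrived later. A rule that compared the date the content itself was first crawled would not be fooled this way.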

wingi

June 27, 2014 at 7:04 am

Hoping to force a duplicate content warning on high-traffic pages could be dangerous. If it happens, they will see it in their webmaster tools and react. But if you pick up only one news item per news provider, it could work.

The timing issue can be tested on your own site: check the frequency of Googlebot requests on the sitemap and on new pages … but you will get no new backlinks or better ranking, so why?

Radenko Zec

June 27, 2014 at 7:19 am

Dear wingi, thanks for your comment. Your advice to monitor the frequency of Googlebot requests is very good. Backlinks are not as important as they were a few years ago. Googlebot now really looks for original, high-quality content that is published regularly (every day). If you also have some good backlinks and social shares, even better.